Aztec Assistant (AztecA) Research and Development Project Summary

Introduction

In Fall 2019, SDSU faculty, along with Instructional Technology Services (ITS) staff and student assistants, began exploring artificial intelligence as a way to improve accessibility, efficiency, and convenience for everyone in classroom settings. During the early months of the COVID-19 pandemic, the ITS team identified a need for a hands-free interface for controlling classroom equipment. This built on an existing need among faculty for whom physical reach presented an accessibility challenge. After initial research, we could not find a satisfactory system that was usable at the scale, and with the range of equipment, of the classrooms we manage. ITS created a proposal to develop a proof of concept in an instructional space to determine the feasibility of this type of hands-free interface at a large scale.

Goals

The goal of the AztecA project was to design a hands-free interface for controlling equipment in a classroom and to determine its viability for deployment in SDSU classrooms at a large scale.

Reasons for pursuing this goal:

- Decreasing faculty contact with shared technology surfaces (e.g., control panels, light switches, presentation mode)

- Establishing assessments to measure faculty accessibility, confidence, and overall user experience (QR code link to faculty survey)

- Informing, improving, and expanding hands-free technologies and practices in the California State University (CSU) and education communities

- Protecting faculty and students from classroom contagions (e.g., COVID-19 and common colds)

- Adding accessible options for users with disabilities or other circumstances that make it difficult or impossible to use the touch interfaces

- Providing convenience for users who want a more flexible, dynamic approach to controlling classroom technologies

Approach

We decided to use voice control as a method of interfacing with the classroom's equipment. Ideally, the user should be able to control any technology in the room that they would normally be able to control through touch by issuing verbal commands.

Due to the scale of our goal, we decided to rely on a consumer voice recognition system for our language processing. This saved the resources that developing our own voice recognition system would have required and let us leverage progress made by prominent third-party companies such as Amazon. Amazon’s consumer voice service is called Alexa, and it runs on a variety of consumer hardware sold by Amazon. For this project we chose the Amazon Echo Dot because of its relatively low cost and low profile.

Initial Challenges:

- How is the Alexa device going to communicate with classroom equipment?

- How can we ensure that each device is going to be able to connect only with the equipment found in the room that it's currently in?

- How can the system account for differences in equipment in certain rooms?

- How can the system recognize the wide range of commands that users may attempt?

- How will the Alexa device know how to respond to a user when feedback depends on the state of equipment in the room?

- How can we maintain the privacy of users while using a third party device that connects to the cloud?

- How will we teach users how to interface verbally with the system?

In developing our model we took inspiration from several sources and tried to find a solution that could potentially scale to the number of rooms we have at SDSU.

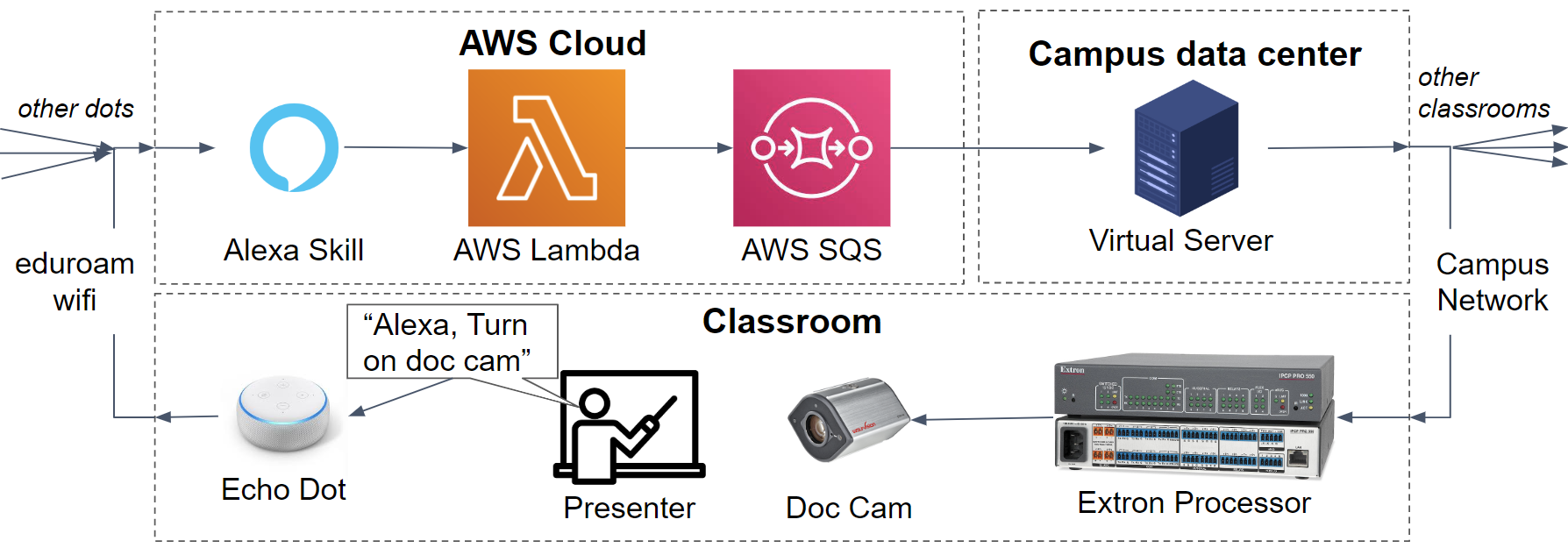

The Echo Dot listens for a “wake word” from users before it begins to parse a statement. Once a user says the wake word, the statement is processed by the Alexa Skill in the cloud. The skill determines what the user wants the classroom equipment to do. The command then travels through the cloud to a server on SDSU’s campus that has network access to the control processor in the correct room. The control processor handles the command as if the equivalent button had been pressed on the touch interface.
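The routing step performed by the campus server can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the device ID, room entry, address, intent names, and button messages are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Room:
    name: str
    processor_host: str  # hypothetical address of the room's control processor
    processor_port: int


# Hypothetical registry: which Echo Dot sits in which room.
ROOMS_BY_DEVICE = {
    "echo-dot-001": Room("Test Podium", "10.0.0.10", 5000),
}

# Hypothetical mapping from a skill intent to the message the control
# processor would treat like a press of the equivalent touch-panel button.
BUTTON_FOR_INTENT = {
    "ProjectorOnIntent": "PROJECTOR_ON",
    "ProjectorOffIntent": "PROJECTOR_OFF",
    "ScreenDownIntent": "SCREEN_DOWN",
}


def route_command(device_id: str, intent: str) -> Tuple[Room, str]:
    """Return the target room and the button-equivalent message."""
    if device_id not in ROOMS_BY_DEVICE:
        raise LookupError(f"unknown device: {device_id}")
    if intent not in BUTTON_FOR_INTENT:
        raise LookupError(f"unsupported intent: {intent}")
    return ROOMS_BY_DEVICE[device_id], BUTTON_FOR_INTENT[intent]
```

Under these assumptions, tying each device ID to exactly one room keeps an Echo Dot from reaching equipment outside its own room, and supporting a new room means adding one registry entry.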

Problems and Solutions with Our Model

- The on-campus data center is a bottleneck for all the Echo devices on campus. If anything slowed down or stopped the server process in the data center, every Echo device on campus would be affected.

  - Proposed Solution: We theorized that running redundant servers with the same process would add reliability in case of a server failure.

- Voice commands must take a rigid format defined by the skill, making it difficult for users to know which commands they can give and how to give them.

  - Solution: Amazon’s Alexa skill builder accepts multiple possible statements to trigger each command, and these are interpolated automatically to cover even more statements.

- The Echo Dot can only control devices connected to the control processor. This excludes devices such as lighting, which would require an upgrade to be connected to the Alexa system.

  - Proposed Solution: Upgrading light switches and other classroom equipment with off-the-shelf IoT products could allow a connection to an Echo Dot in the room.

- The system is difficult to deploy and set up in each room because we would need to write new code for each room’s control processor. We would also need to add an entry to the server that routes the correct commands to the correct room.

  - Solution: We wrote the control processor code modularly to make it easier to deploy to multiple rooms with different specifications.

- The system contains multiple security vulnerabilities.

  - The command audio coming from the user is exposed to the cloud.

  - Classroom technology that was previously accessible only from on-campus sources can now be accessed from the cloud through the on-campus server.

  - Solution: Campus firewalls can prevent unintended connections, and the AWS pipeline can be restricted to require a secret key.

- The system does not have a way to return a response from the classroom to the cloud, which prevents the Echo Dot from giving the user a custom response that depends on the status of their command.
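One way the "secret key" restriction mentioned above could work is for the cloud side to sign each command with a shared secret and for the campus server to reject anything whose signature does not match. The summary does not say which mechanism was used, so the HMAC-SHA256 scheme and the function names below are assumptions chosen only for illustration.

```python
import hashlib
import hmac


def sign(secret: bytes, body: bytes) -> str:
    """Compute a hex HMAC-SHA256 signature over the request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_authorized(secret: bytes, body: bytes, signature: str) -> bool:
    """Accept the request only if its signature matches the shared secret."""
    expected = sign(secret, body)
    # compare_digest runs in constant time, which avoids leaking how much
    # of the signature was correct.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

In this sketch the cloud function would send `sign(secret, body)` alongside each command, and the campus server would call `is_authorized` before routing anything to a room.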

Implementation

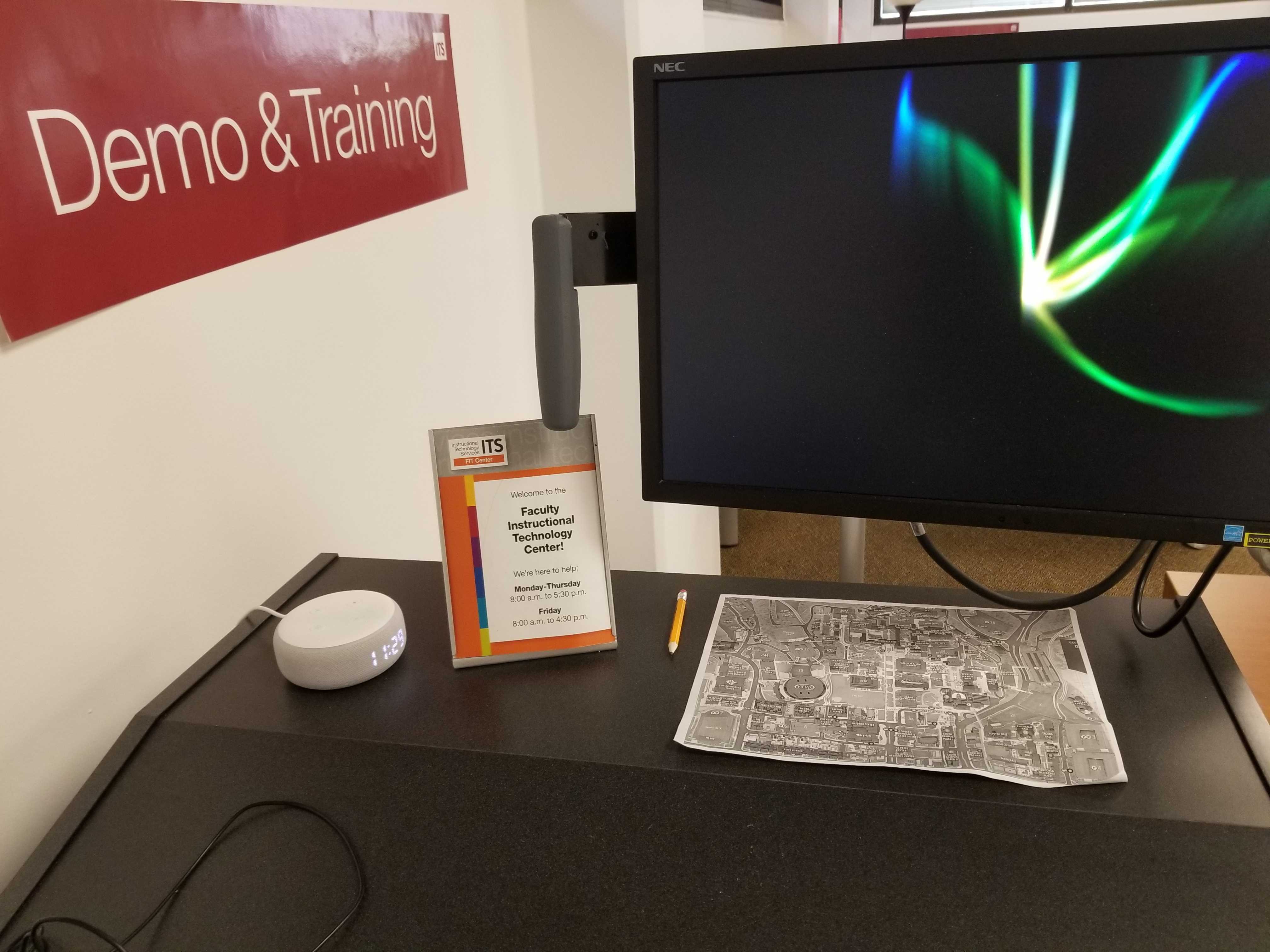

To test the efficacy of our model we set up a prototype of the system. The prototype was located at a replica classroom podium ITS uses for testing and training. Most of the technology that is included in a standard connected classroom at SDSU is included in this podium.

We were able to set up all the steps listed in our model with the exception of the on-campus server. The role of this server was played by an on-campus computer connected directly to the processor over Ethernet. We programmed the control processor in the room to accept commands from the local computer. We achieved touch-equivalent controls for the projector screen, podium Mac, podium PC, USB switcher, projector on/off, and display switcher. While this left out other important devices in our classrooms, we felt it was a broad enough range of functionality to assess the viability of the system at a larger scale. A set of instructions listing which devices could be controlled and which verbal commands could be used was displayed alongside the Echo Dot.
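The hand-off from the local computer to the control processor might look something like the sketch below. The wire format is an assumption: the summary does not describe the actual protocol, so a newline-terminated ASCII message over a TCP socket is used here purely as a stand-in, and the command name is hypothetical.

```python
import socket


def send_command(sock: socket.socket, message: str) -> None:
    """Send one newline-terminated command over an open connection.

    Nothing is read back, mirroring the one-way flow described above.
    """
    sock.sendall(message.encode("ascii") + b"\n")
```

With a reachable processor, a caller could open the connection with `socket.create_connection((host, port), timeout=5)` and pass the resulting socket to `send_command`, where `host` and `port` are whatever address the room's processor listens on.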

Results

The proof of concept succeeded in delivering the technical capabilities we had established at the beginning of the project. However, we concluded that further developing and deploying the system would cost too much time for the benefits it would provide. Even so, developing this system produced findings and skills that have been useful in other classroom improvement efforts and will inform future development of hands-free systems. In assessing the viability of the system, we consulted with potential faculty users, instructional designers, university administrators, and the learning environments technicians who would help support and install the system in more rooms. We tested the system to reveal problems and areas for improvement. Over the course of the project we have only found more desire for a system that accomplishes these goals. While we may not have accomplished all of our goals, we have gained and shared information that will be useful for future progress in the field of hands-free user interfaces.

Main Takeaways

- The AztecA Prototype showed initial success in terms of functionality. The system was responsive and easy to use. Multiple possible phrases for each command were accounted for and with a reference sheet it was easy for the user to find any command they wanted. There was high accuracy in recognizing each command correctly. The prototype accomplished our goal of providing an intuitive voice interface for most equipment in the room.

- There is a significant accessibility gap when the only means of interfacing with equipment is touch-based. A voice interface provides more options for using the technology in a room, making all of that technology more accessible.

- A commercial voice recognition device that does not require an internet connection and can relay commands directly to Extron control processors would greatly increase the viability of this project.

- Artificial intelligence can be used to translate voice commands into a finite set of operations that can be executed by a classroom's control processor. Future developments in this area will make the interface more reactive and adaptable, increasing the benefit of a system with these goals.

- A future system aiming to accomplish these goals should be opt-in only and should not collect, send, or store any information on an external system.

- In future classroom developments, a standard needs to be created for connecting classroom equipment with the internet in a safe and secure way.

- Classroom control systems can be created in a standardized way that makes it easier to deploy new equipment on a large scale. This includes modular, well documented hardware and software configurations.
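The idea of mapping many spoken phrasings onto a finite set of operations, noted in the takeaways above, can be illustrated with a plain lookup table. This is not how Alexa's language model works and not the project's skill definition; the operations, templates, and synonyms below are hypothetical.

```python
import re
from itertools import product
from string import Formatter
from typing import Dict, List, Optional

# Hypothetical operations with phrase templates; {slots} are filled from SYNONYMS.
TEMPLATES: Dict[str, List[str]] = {
    "PROJECTOR_ON": ["{turn_on} the projector", "projector on"],
    "SCREEN_DOWN": ["{lower} the screen", "screen down"],
}
SYNONYMS: Dict[str, List[str]] = {
    "turn_on": ["turn on", "switch on", "power on"],
    "lower": ["lower", "bring down"],
}


def expand(template: str) -> List[str]:
    """Fill every slot in a template with each combination of synonyms."""
    slots = [name for _, name, _, _ in Formatter().parse(template) if name]
    choices = [SYNONYMS[slot] for slot in slots]
    return [template.format(**dict(zip(slots, combo))) for combo in product(*choices)]


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", text.lower()).split())


# Every expanded phrase points at exactly one operation.
PHRASE_TO_OPERATION: Dict[str, str] = {
    normalize(phrase): operation
    for operation, templates in TEMPLATES.items()
    for template in templates
    for phrase in expand(template)
}


def interpret(utterance: str) -> Optional[str]:
    """Return the matching operation, or None if the phrase is unknown."""
    return PHRASE_TO_OPERATION.get(normalize(utterance))
```

Because the set of operations is finite and known in advance, an unrecognized phrase simply maps to nothing rather than to an unintended action.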

Resources

https://github.com/sdsu-its/Azteca

This repository contains development code for the server and development Global Scripter files for the control processor.

This is the documentation of the first brainstorming meeting for this project